Llama 3.1 8B Instruct Template Ooba

How do I specify the chat template and format the API calls? How do I use custom LLM templates with the API? I have the model up and running with a front end, but when answering it falls into an endless loop. Putting <|eot_id|> and <|end_of_text|> in the custom stopping strings doesn't change anything. I tried my best to piece together the correct prompt template (I originally included links to sources, but Reddit did not like the links for some reason) and wrote my own instruction template, but I still get the same looping answers.
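
For the API question, a small client sketch may help. It makes three assumptions. First, text-generation-webui was started with `--api`. Second, it serves its OpenAI-compatible endpoint at the default `http://127.0.0.1:5000/v1/chat/completions`. Third, the `mode` and `instruction_template` request fields are webui-specific extras as I understand them, so check them against your version. The helper names `build_chat_request` and `send_chat_request` are hypothetical.

```python
import json
import urllib.request

# Assumption: text-generation-webui running with --api on its default port 5000.
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_chat_request(messages, template_name=None, max_tokens=512):
    """Build an OpenAI-style chat payload (hypothetical helper)."""
    payload = {
        "messages": messages,
        "mode": "instruct",  # assumed webui extra: format with the instruction template
        "max_tokens": max_tokens,
    }
    if template_name is not None:
        # Only set this to override the template the model loader detected.
        payload["instruction_template"] = template_name
    return payload


def send_chat_request(payload, url=API_URL, timeout=120):
    """POST the payload and return the assistant's reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `send_chat_request(build_chat_request(messages))` returns the reply text, where `messages` is a list of `{"role": ..., "content": ...}` dicts. Leaving `template_name` unset lets the loader's auto-detected template apply. That matches the advice not to touch the instruction template.
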
Here are instructions for anybody else who needs to set the instruction template correctly in oobabooga: you don't touch the instruction template at all, because the model loader takes care of it. Outside oobabooga, you can also run the model directly with Transformers.
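
To see what the loader has to work with, you can read a Hugging Face model folder's `tokenizer_config.json`. This sketch assumes an HF-format download; GGUF files store the template in their own metadata instead. It also assumes the file has the usual `chat_template` and `eos_token` keys. `check_llama3_config` is a hypothetical heuristic, not part of oobabooga.

```python
import json
from pathlib import Path


def inspect_tokenizer_config(model_dir):
    """Return (chat_template, eos_token) from a Hugging Face model folder."""
    config_path = Path(model_dir) / "tokenizer_config.json"
    with config_path.open(encoding="utf-8") as f:
        config = json.load(f)
    eos = config.get("eos_token")
    # eos_token may be a plain string or a dict such as {"content": "<|eot_id|>", ...}.
    if isinstance(eos, dict):
        eos = eos.get("content")
    return config.get("chat_template"), eos


def check_llama3_config(model_dir):
    """Hypothetical heuristic: list likely template/stop-token problems."""
    chat_template, eos = inspect_tokenizer_config(model_dir)
    problems = []
    if not chat_template:
        problems.append("no chat_template, so the template cannot be auto-detected")
    elif "<|start_header_id|>" not in chat_template:
        problems.append("chat_template does not look like the Llama 3 format")
    if eos != "<|eot_id|>":
        problems.append(f"eos_token is {eos!r}; replies may not stop at <|eot_id|>")
    return problems
```

An `eos_token` other than `<|eot_id|>` is one plausible cause of the endless loop described above. The Instruct model ends each reply with `<|eot_id|>` rather than `<|end_of_text|>`.
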
Llama is a large language model developed by Meta. Llama 3.1 comes in three sizes: 8B, 70B, and 405B. Llama 3.1 Instruct keeps the special tokens introduced with Llama 3 Instruct. A prompt should contain a single system message, can contain multiple alternating user and assistant messages, and always ends with the last user message followed by the assistant header.
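
The format rules above can be written out as a small renderer. This is a sketch of the Llama 3 Instruct layout as I understand it:

- `<|begin_of_text|>` first;
- then, per message, a `<|start_header_id|>role<|end_header_id|>` header and a blank line;
- then the content and `<|eot_id|>`.

It is not Meta's reference code. It also omits the date lines that the Llama 3.1 chat template adds to the system message. `format_llama3_prompt` is a hypothetical name.

```python
BEGIN = "<|begin_of_text|>"
EOT = "<|eot_id|>"


def header(role):
    """Message header: role name between header tokens, followed by a blank line."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def format_llama3_prompt(messages):
    """Render [{"role": ..., "content": ...}] as a Llama 3 Instruct prompt."""
    if not messages or messages[-1]["role"] != "user":
        raise ValueError("the prompt must end with a user message")
    roles = [m["role"] for m in messages]
    if roles.count("system") > 1 or ("system" in roles and roles[0] != "system"):
        raise ValueError("only one system message, placed first")
    turns = [r for r in roles if r != "system"]
    expected = ["user", "assistant"] * len(turns)
    if turns != expected[: len(turns)]:
        raise ValueError("user and assistant messages must alternate, starting with user")

    prompt = BEGIN
    for m in messages:
        # Each message is header + trimmed content + end-of-turn token.
        prompt += header(m["role"]) + m["content"].strip() + EOT
    # End with an open assistant header so the model writes the reply
    # and, if the loader stops on it, finishes with <|eot_id|>.
    return prompt + header("assistant")
```

Sending this string as a raw completion prompt only fixes the input side. Generation still has to stop on `<|eot_id|>` (token id 128009), which is a loader setting rather than part of the text.
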
For tool use, the system prompt can instruct the model: "When you receive a tool call response, use the output to format an answer to the original user question." Meta's Llama 3.2 documentation page covers capabilities and guidance specific to the models released with Llama 3.2: the Llama 3.2 quantized models (1B/3B), the Llama 3.2 lightweight models (1B/3B) and the Llama 3.2 multimodal models (11B/90B).
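
For the tool-call step, this is a sketch of how the conversation might continue once a tool has run. It assumes the Llama 3.1 JSON tool-calling convention. Under that convention, the tool result goes back under the `ipython` role, and the model then answers in a fresh assistant turn. `continue_after_tool_call`, `TOOL_SYSTEM_HINT`, and the `get_weather` tool in the usage note are hypothetical.

```python
import json

EOT = "<|eot_id|>"

# System-prompt sentence from the page; include it in the system message when tools are enabled.
TOOL_SYSTEM_HINT = (
    "When you receive a tool call response, use the output to format "
    "an answer to the original user question."
)


def header(role):
    """Llama 3 message header: role name between header tokens, then a blank line."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def continue_after_tool_call(prompt, tool_call, tool_output):
    """Append a JSON tool call and its result to a rendered Llama 3.1 prompt.

    prompt: rendered text ending with an open assistant header
    tool_call: dict the model produced, e.g. {"name": ..., "parameters": {...}}
    tool_output: JSON-serialisable result of running the tool
    """
    if not prompt.endswith(header("assistant")):
        raise ValueError("prompt must end with an open assistant turn")
    # Close the assistant turn that contained the tool call.
    prompt += json.dumps(tool_call) + EOT
    # Feed the tool result back under the ipython role.
    prompt += header("ipython") + json.dumps(tool_output) + EOT
    # Reopen an assistant turn so the model writes the final answer.
    return prompt + header("assistant")
```

Suppose the model emits `{"name": "get_weather", "parameters": {"city": "Paris"}}`. Calling `continue_after_tool_call(prompt, call, {"temp_c": 21})` then yields a prompt whose final assistant turn should answer the original question.
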

Junrulu/Llama38BInstructIterativeSamPO · Hugging Face

META LLAMA 3 8B INSTRUCT LLM How to Create Medical Chatbot with

Llama 3 Swallow 8B Instruct V0.1 a Hugging Face Space by alfredplpl

anguia001/MetaLlama38BInstruct at main

metallama/MetaLlama38BInstruct · Where can I get a config.json

Manage Access models/llama38binstruct

unsloth/llama38bInstructbnb4bit · Hugging Face

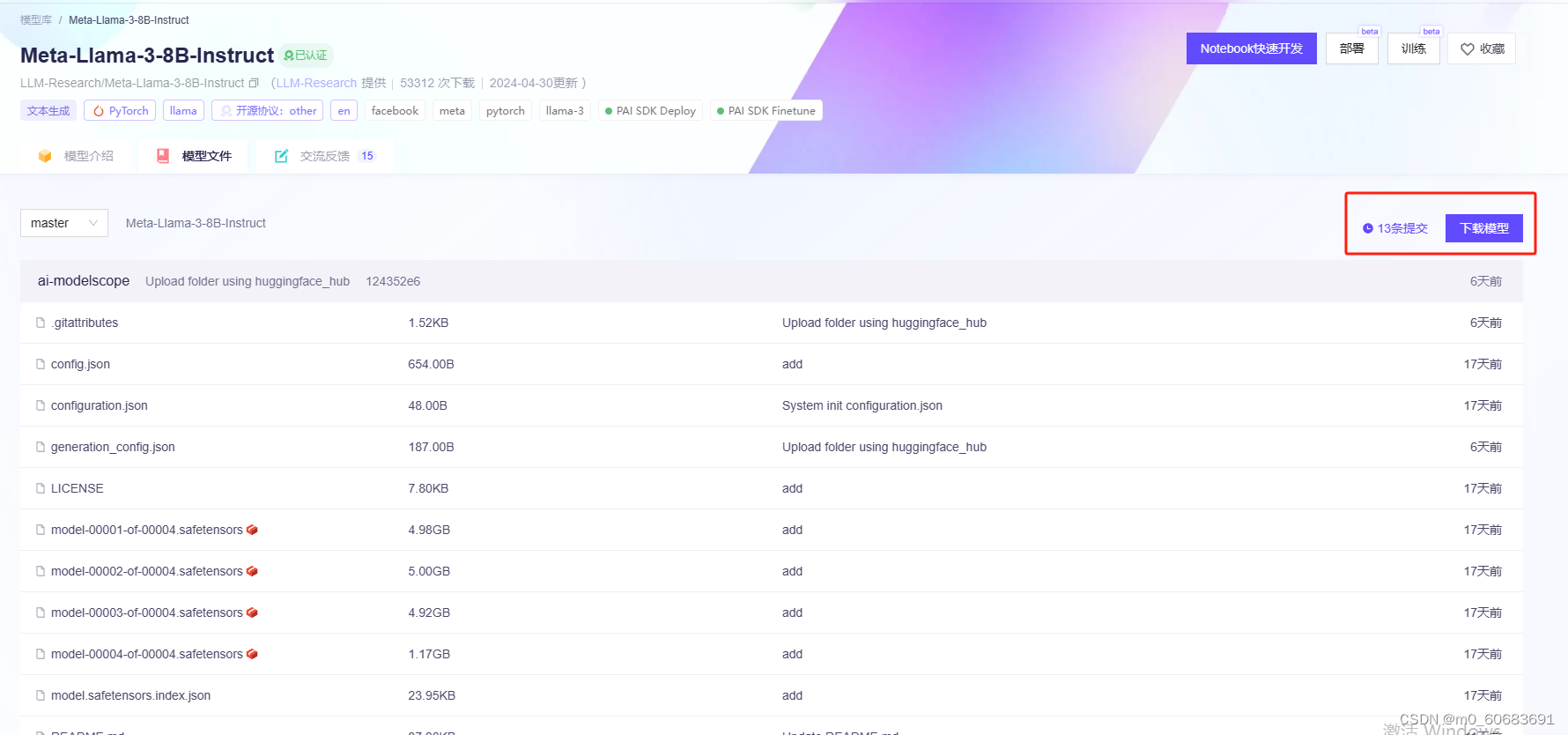

教程:利用LLaMA_Factory微调llama38b大模型_llama3模型微调保存_llama38binstruct下载CSDN博客

Llama 3 8B Instruct Model library
